Welcome to Venture Logbook. In our Trend Spotlight, we ask whether personal AI models will be the next PC, and in Startup Unboxed, we analyze a company building AI voice scam detection. We'll also define "fine-tuning" in our Tech Dictionary, giving you the signals for this new era in a 6-8 minute read.

Trend Spotlight

Will personal AI models become as mainstream in the next decade as personal computers did?

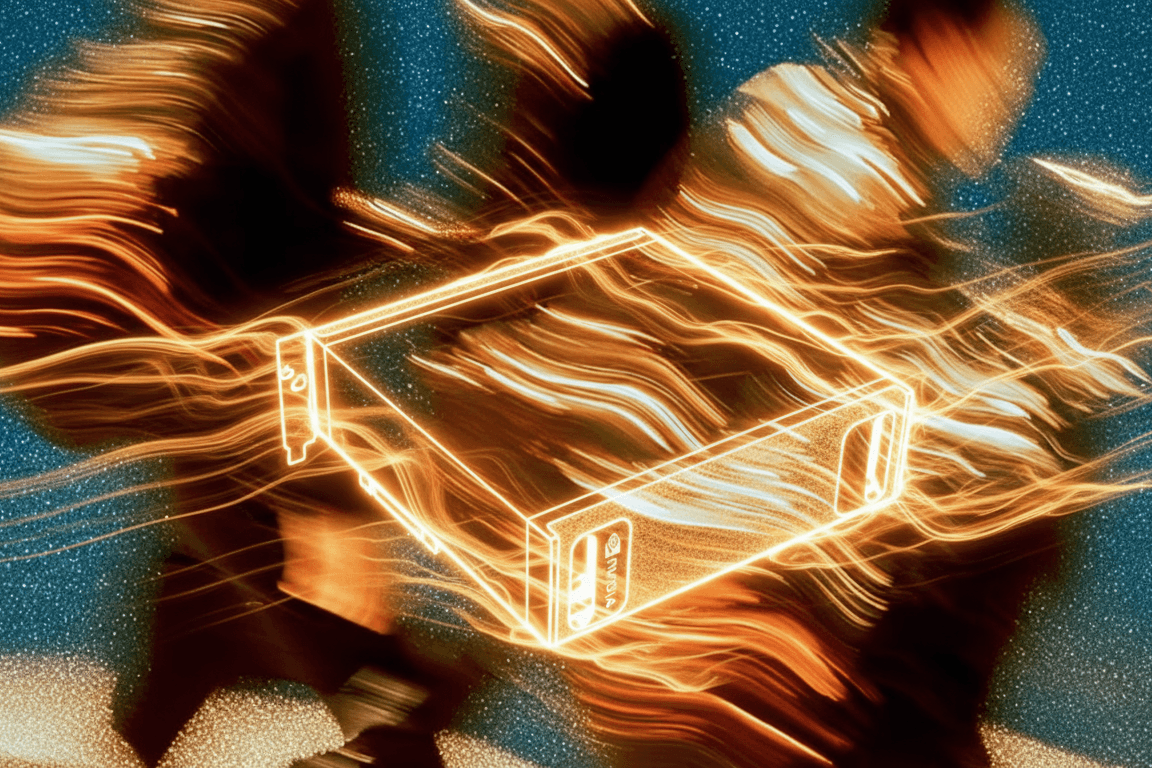

NVIDIA just released the DGX Spark, a desktop machine powerful enough for anyone to fine-tune their own models at home. I’ve been thinking about this, and my question is not so much ‘Will it happen?’ as ‘How long will it take?’

I know some folks wonder why we even need models that run on our own devices. What level of capability should these models actually reach, or not reach? (Do we really need our home models to match Einstein-level intelligence?)

It helps to look back at how long personal computers and the internet took to become household staples. When did every home finally get online, and how many years did it take?

From IBM’s System/360 mainframe (1964) to the Apple II (1977), and from ARPANET (1969) to the public launch of the World Wide Web (1991), each of these transformations took roughly 20-30 years to reach ordinary households. People back then probably wondered too: why would a family ever need a computer at home, let alone the cool option of a laptop?

Back in the day, if I wanted to win a research competition (yep, I grabbed the championship), I’d use my school’s computers and academic network because they were way faster. Even in grad school, I still defaulted to the academic network’s speed for serious research.

So whether edge models become ubiquitous isn’t really the point; what matters is work patterns, and the cost and difficulty of accessing these models.

When you compare edge models with closed models accessed through APIs, such as OpenAI’s or Google Gemini, running your own might feel unnecessary or unappealing. But as Andrej Karpathy has said, this is actually a new OS, and everyone tends to have a favorite OS.

From an operating system perspective, if this truly is an evolution, consumers may not really get to “choose” it: giant OS vendors will compete, but there will always be room for open-source options like Linux.

Are any of today’s unicorns going to become the next Microsoft of this era? When does the paradigm shift become truly obvious?

Notable players in this space (Public/Unicorn/Startup):

- NVIDIA: The obvious leader. Will Apple stage a comeback in the era of personal AI PCs?

- Hugging Face: The go-to open-source platform for model downloads.

- Unsloth AI: Building infrastructure to make open-source AI accessible to all.

Startup Unboxed #2

The safety sector is one of my focus areas. This team, which I met at a demo day, is building AI voice scam detection and prevention to counter deepfake risks. In their demo, they uploaded an audio file and the system determined whether it was a scam in about 10 seconds. I spent more time with them than with any other team, mostly discussing their business model. I focused on this team because, once AI agents are everywhere and most calls come from them, stopping scam bots will become a crucial problem.

Questions I had:

- The user journey needs work: people won’t upload a file just to confirm a call is authentic. The team is already reworking this feature and flow.

- On the business model: who will pay first? End consumers? They mentioned talks with telecom providers, which shows market interest. The tricky part is a common problem when small companies partner with big ones: the big companies don’t understand the "new" market and suggest product strategies that don’t suit a startup.

- When entering a Hair-on-Fire market (from Sequoia’s Arc Product-Market Fit Framework), you must be 10x better, either in technology (model/algorithm) or in product. I asked about their benchmarks and evaluation: does their claimed accuracy hold up in real-world scenarios, or is it just lab data? And what if big companies that already control telecom solutions build similar products, or acquire mediocre teams and leverage their distribution?

If I were the team, I would:

- Target end-user segments that are prone to receiving scam calls.

- Accumulate large amounts of data to train a better model.

- Focus on fundraising first, then partner with big companies later.

- Focus on one specialized field instead of building multiple features on different models, to avoid spreading attention thin.

This would create a business model that is both useful and sustainable.

🖌 Startup Unboxed is a series where I meet startups, journal the takeaways, and share my thoughts.

Fine-tuning

Model fine-tuning is a machine learning technique that adapts a pre-trained model to perform better on a specific task by training it further on specialized data. Instead of building an AI model from scratch, you start with a model that already understands general patterns and adjust it to work with your particular use case, industry, or domain.

Fine-tuning is like learning to drive a different car. You already know how to drive—steering, braking, accelerating—but when you get a new vehicle, you adjust to its specific steering sensitivity, brake responsiveness, and blind spots. You're not relearning driving from scratch; you're adapting your existing skills to this particular car.

Explaining to Grandma: It's like taking a smart helper who already knows how to cook and teaching them exactly how your family likes its special dishes made.
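For readers who want to see the idea in code, here is a toy sketch. It is not how large language models are fine-tuned in practice (those have billions of parameters and are trained with deep-learning frameworks); it only shows the core move. A tiny one-feature linear model is first "pre-trained" on a broad dataset, then trained further on a small, specialized dataset, starting from the pre-trained weights instead of from zero. The datasets, the helper names (`train`, `mse`), and the smaller fine-tuning learning rate are all illustrative assumptions.

```python
import random

def predict(w, b, x):
    # A one-feature linear model: y = w * x + b
    return w * x + b

def mse(w, b, data):
    # Mean squared error of the model on a list of (x, y) pairs
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, lr, epochs):
    # Plain gradient descent on MSE, starting from whatever weights are passed in
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# "Pre-training": learn a general pattern (y is roughly 2x) from a large, broad dataset
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(500)]
general = [(x, 2 * x + rng.gauss(0, 0.1)) for x in xs]
w0, b0 = train(0.0, 0.0, general, lr=0.1, epochs=200)

# "Fine-tuning": a small, hypothetical domain dataset where the pattern is shifted (y = 2x + 0.5).
# Training starts from the pre-trained (w0, b0), not from zero, with a smaller learning rate.
domain = [(x, 2 * x + 0.5) for x in [-0.5, -0.2, 0.1, 0.4, 0.8]]
w1, b1 = train(w0, b0, domain, lr=0.05, epochs=200)

print(f"domain error before fine-tuning: {mse(w0, b0, domain):.4f}")
print(f"domain error after fine-tuning:  {mse(w1, b1, domain):.4f}")
```

The point to notice is the starting point: the second `train` call begins from `w0, b0` rather than `0.0, 0.0`, so the slope learned during pre-training carries over and only the domain-specific shift has to be learned, much like the driver adjusting to a new car rather than relearning how to drive.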

🧠 Tech Dictionary decodes common tech terms so clearly that even your grandma would get it. Quickly find out what matters and why, so you're never lost in the tech talk.

👋 Thanks for reading! For more tailored insights on venture building in the AI era, subscribe at Venture Logbook.

Disclaimer: This newsletter is for informational purposes only and does not constitute investment advice. All opinions expressed are personal and not financial recommendations.