Welcome to Venture Logbook. With OpenAI pulling back on AI giving advice, we question the future of autonomous systems and the risks for agent-based startups. We then share a reflection on why a founder's "taste" can become their ultimate moat in Startup Unboxed and define "Autonomous AI" in our Tech Dictionary, all in a 5-minute read.

Trend Spotlight

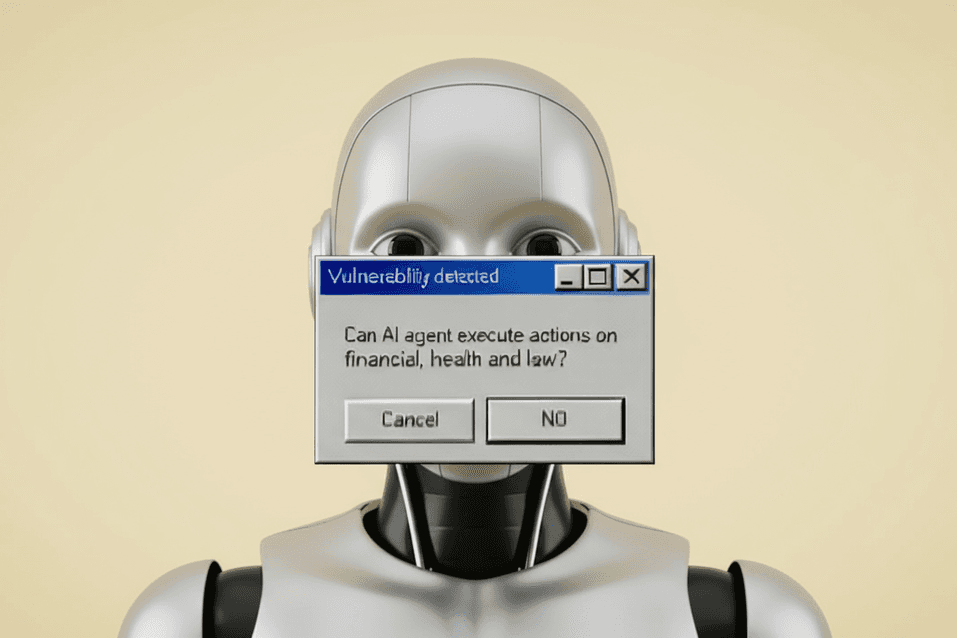

OpenAI announced that, as of October 29, 2025, ChatGPT will stop offering specific advice in areas like healthcare, law, and finance. Instead, the model will focus on explaining principles and mechanisms and will recommend that users seek guidance from professionals.

OpenAI’s move seems to be aimed at minimizing legal and regulatory risk, repositioning AI as a tool that helps you make sense of the world, but does not make decisions for you.

In a sense, we're hitting the brakes on "level 3" autonomy: the road to a fully autonomous world has shifted into reverse and taken us back a step.

Do we even need an AI that can’t provide advice or take action?

This announcement not only appears to protect consultants' jobs (which most people probably support) but also reinforces the human-AI collaboration approach known as the “copilot” model.

Does this mean fully autonomous systems will never happen?

From my perspective, the world still hasn’t figured out how to truly work with AI. It's not really about who leads and who follows. It’s a matter of users, regulators, and ultimately, responsibility.

Whenever there’s a big technological breakthrough, these questions keep coming up. They often escalate into ideological debates, forcing tech companies to pour huge amounts of legal effort, people, and time into resolving them. (Think Uber, Airbnb...)

So, given this policy, what lies ahead for AI agents?

If an agent-based company builds actionable systems, it’ll face accountability issues: imagine an agent making a purchase online “with the user’s consent,” and then the user regrets it; or a workflow agent delivers a report to a client, then something goes wrong and the client comes back to the company—who takes responsibility?

I think that while technology, society, and regulation are still hashing things out (just look at the major AI players and all their copyright lawsuits...), we don’t yet have a “rulebook” for how to interact with this new kind of entity.

If no big tech company paves a viable path forward, these areas will remain on the sidelines: attractive and potentially a temporary monopoly, but risky, dangerous terrain.

Notable players in Copilot (Health/Law/Finance):

- OpenEvidence: The go-to AI platform for medical answers, powered by evidence-based literature.

- Harvey: AI copilot for law firms that streamlines legal research, drafting, and workflow automation.

- Perplexity Finance: Real-time financial analysis and research using AI.

Startup Unboxed #3

The team I've been advising for a few weeks has high potential, mostly thanks to its leader. Our first meeting lasted nearly two hours, and then he brought his cofounder on board. I joined multiple sessions to watch them brainstorm, and their ideas sparked off each other.

I love chatting with and learning from the next generation, maybe because I spent seven years as a high school math tutor. It's always fascinating to see how younger founders think and handle challenges.

The founder truly stands out, not only because he’s smart but also because he’s clear about his goals and purpose. He’s laser-focused on gathering every possible resource to succeed, which makes you want to work with him, even if that means replying to messages in the middle of the night.

But what I found most compelling about him is that he’s highly self-aware and reflective.

He openly shares his thoughts and observations about how other startups operate and asks for feedback, and in doing so he raised the exact problem I had spotted but hadn’t pointed out. (Why? Sometimes I prefer not to call out issues immediately; maybe I’m wrong, or maybe I just want to see if it’s really a problem.)

What I learned? Taste really matters, especially for founders.

The whole point is to expose yourself to the best that humanity has created. Draw inspiration, bring it into your own work, and forge a unique taste. If a team doesn’t have taste, whether it’s engineering, product, or user experience, trust me, users notice.

People might not get it right away. But someday, that taste becomes your moat.

🖌 Startup Unboxed is a series in which I meet startups, journal the takeaways, and share my thoughts.

Autonomous AI

A system or machine that can make decisions and act on its own, without step-by-step human instructions.

Autonomous AI interprets goals, reasons through complex situations, makes decisions, and executes tasks from start to finish. For example, self-driving cars navigate the world and make split-second decisions.

For most people, autonomy feels like the experience of using a robot vacuum. Once you set it up and map your home, the robot decides where to go and what to clean, all by itself. (That’s why I always say the smartest autonomous robot is the robot vacuum!)

Explaining to Grandma: Autonomous AI is like a smart helper that understands what you want and gets the job done without you needing to explain every step.

🧠 Tech Dictionary helps you decode common tech terms so clearly, even your grandma would get it. Quickly find out what matters and why, so you're never lost in the tech talk.

👋 That's all for now! For more tailored insights on the evolving AI landscape and startup world, subscribe at Venture Logbook.

Disclaimer: This newsletter is for informational purposes only and does not constitute financial, legal, or investment advice.